HiPerGator Tutorial

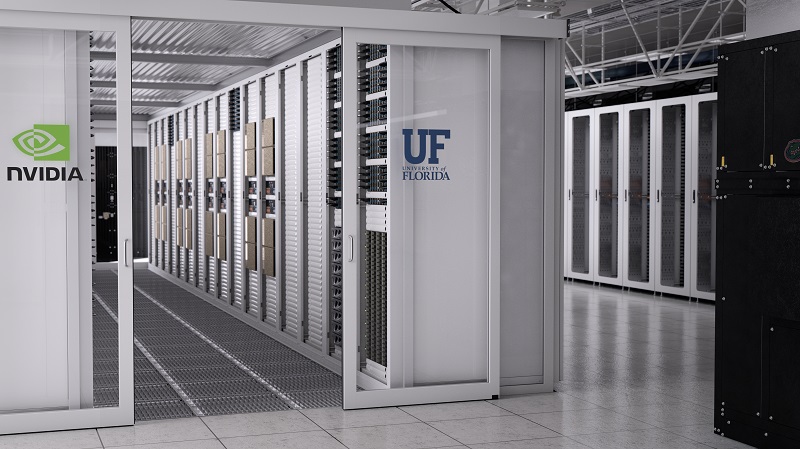

HiPerGator is the University of Florida’s 4th-generation supercomputer, featuring 63 DGX B200 nodes (504 NVIDIA Blackwell GPUs), 19,200 CPU cores, 600 NVIDIA L4 GPUs, and an 11 PB all-flash storage system, set for full production in Fall 2025.


1. Quick Start

This section guides you through the very basics of connecting to HiPerGator and running your first job.

1.1 Connecting to HiPerGator

There are two main ways to access HiPerGator:

1.1.1 SSH (command line access)

If you prefer the terminal, log in via SSH:

ssh <gatorlink_username>@hpg.rc.ufl.edu

Replace <gatorlink_username> with your UF GatorLink ID.

The first login may ask you to confirm the server's host key fingerprint. Duo multi-factor authentication (MFA) is required.

Once logged in, you start in your home directory:

/home/<gatorlink_username>

Tip: keep login node usage light. Use it for editing files, submitting jobs, and checking job status; do not run heavy CPU/GPU workloads on the login node.

1.1.2 Open OnDemand (web interface)

For users who prefer a graphical interface, Open OnDemand offers browser-based remote access:

👉 https://ood.rc.ufl.edu

You can launch VNC-based GUI sessions, file explorers, and interactive jobs.

Note: The GUI provided by Open OnDemand is VNC-based, not a full desktop environment. Some GUI applications requiring hardware acceleration may not run properly.

1.2 Submit Your First Job

HiPerGator uses Slurm as the job scheduler. Heavy computations must not be run on the login nodes.

You have two main ways to run jobs:

- Batch jobs

- Interactive jobs

Batch Job Example

Create a Slurm script:

vim test_job.slurm

Paste:

#!/bin/bash
#SBATCH --job-name=Example
#SBATCH --mail-type=END,FAIL
#SBATCH --mail-user=your_email@ufl.edu
#SBATCH -p your_preferred_partition
#SBATCH --qos=your_qos
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=3
# To request GPUs, delete the leading "# " on the next line:
# #SBATCH --gres=gpu:b200:4
#SBATCH --mem=16GB
#SBATCH --time=00:20:00
#SBATCH --output=my_first_job_%j.log

echo "Hello from HiPerGator!"

Key fields to check before submitting:

- --job-name: short, descriptive job name

- --mail-user: use a valid email so you get notifications

- -p/--qos: must match the partition/QOS you are allowed to use

- --time: set a realistic time limit; too long can delay scheduling

Warning: Requesting more CPUs/GPUs/memory/time than your QOS allows may cause the job to be held or rejected.
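A slightly fuller version of the script can also record what Slurm actually allocated, which makes the log more useful when something goes wrong. This is a sketch, not an official UFRC template: SLURM_JOB_ID and SLURM_CPUS_PER_TASK are standard Slurm variables, the fallback values are assumptions added so the file can be dry-run with plain bash, and OMP_NUM_THREADS only matters for threaded (OpenMP) programs.

```shell
#!/bin/bash
#SBATCH --job-name=Example
#SBATCH --mail-type=END,FAIL
#SBATCH --mail-user=your_email@ufl.edu
#SBATCH -p your_preferred_partition
#SBATCH --qos=your_qos
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=3
#SBATCH --mem=16GB
#SBATCH --time=00:20:00
#SBATCH --output=my_first_job_%j.log

# Slurm sets these inside a job; the fallbacks let you dry-run the
# script with plain bash (bash test_job.slurm) before submitting.
job_id="${SLURM_JOB_ID:-not-in-slurm}"
cpus="${SLURM_CPUS_PER_TASK:-1}"

# Threaded (OpenMP) programs read this; match it to the CPUs you requested.
export OMP_NUM_THREADS="$cpus"

echo "Hello from HiPerGator!"
echo "Job ID:        $job_id"
echo "Node:          $(uname -n)"
echo "CPUs per task: $cpus"
echo "Started at:    $(date)"
```

Running bash test_job.slurm on the login node only prints the fallback values; treat it as a quick syntax check, not a way to run real work there.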

Submit the job:

sbatch test_job.slurm

Check queue:

squeue -u $USER

View output:

cat my_first_job_*.log
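The submit, check, and view steps above can be chained in one small script. A sketch, assuming the job script is saved as test_job.slurm in the current directory; parse_jobid is a hypothetical helper, while sbatch --parsable, squeue -j and sacct -j are standard Slurm options.

```shell
#!/bin/bash
# `sbatch --parsable` prints "jobid" or "jobid;cluster"; keep only the ID.
parse_jobid() {
    local out="$1"
    echo "${out%%;*}"
}

# Run the Slurm part only where Slurm is installed and the script exists.
if command -v sbatch >/dev/null 2>&1 && [ -f test_job.slurm ]; then
    jobid=$(parse_jobid "$(sbatch --parsable test_job.slurm)")
    echo "Submitted job $jobid"

    # Pending (PD) or running (R) jobs show up here; finished jobs drop off.
    squeue -j "$jobid"

    # Accounting keeps a record after the job ends (state, run time, peak memory).
    sacct -j "$jobid" --format=JobID,JobName,State,Elapsed,MaxRSS

    # %j in --output expands to the job ID, so the log name is predictable.
    # The file does not exist until the job has started.
    cat "my_first_job_${jobid}.log" 2>/dev/null || echo "Log not written yet"
fi
```

Using the exact file name avoids the wildcard in cat my_first_job_*.log printing every old log in the directory.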

Interactive Job Example

Request an interactive session on a compute node:

srun --ntasks=16 --mem=64GB --gres=gpu:1 \
    --partition=hpg-turin --time=00:20:00 \
    --pty /bin/bash

Use this for: debugging, testing commands, running notebooks, or exploring the environment.
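Once the interactive shell opens, it can help to confirm what you actually received before you start debugging. A minimal sketch; show_allocation is a hypothetical helper, and it assumes Slurm exports SLURM_JOB_ID and SLURM_NTASKS and sets CUDA_VISIBLE_DEVICES for GPU requests, as it does in typical configurations.

```shell
#!/bin/bash
# Summarize what Slurm gave the current session. Outside a job the
# Slurm variables are unset, so fallback text is printed instead.
show_allocation() {
    echo "Job ID: ${SLURM_JOB_ID:-none (not inside a Slurm job)}"
    echo "Node:   $(uname -n)"
    echo "Tasks:  ${SLURM_NTASKS:-unknown}"
    echo "GPUs:   ${CUDA_VISIBLE_DEVICES:-none visible}"
}

show_allocation

# Inside srun/salloc, nvidia-smi -L should list only the GPUs assigned to the job.
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi -L || true   # tolerate nodes without a working GPU driver
fi
```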

✓ At this point, you’ve successfully:

- Logged into HiPerGator

- Submitted a Slurm job

- Retrieved output

- Started an interactive session

You now have the full basic workflow from login → job submission → output checking → interactive work.

2. Tricks


Module System (ml)

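module load apptainer    # load a module (short form: ml apptainer)
module avail             # list available modules (short form: ml av)
module list              # show currently loaded modules (short form: ml)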

Tip: always load required modules inside your job script or interactive session, so the environment is correctly set up on the compute node.
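One way to follow that tip is a small helper at the top of a job script. A sketch only: load_modules is a hypothetical name, and starting with module purge is a common Lmod practice rather than a documented UFRC requirement.

```shell
#!/bin/bash
# Hypothetical helper for the top of a job script: start clean, then load
# only the modules the job needs, e.g.  load_modules apptainer
load_modules() {
    if ! command -v module >/dev/null 2>&1; then
        echo "module command not found (not on HiPerGator?)" >&2
        return 1
    fi
    module purge          # drop modules inherited from the login shell
    module load "$@"      # load the requested modules
    module list           # record the final environment in the job log
}
```

Calling load_modules apptainer right after the #SBATCH lines makes each job start from the same environment regardless of what was loaded when it was submitted.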

Monitor your QOS / System Status

sacctmgr show associations where user=$USER format=account,user,partition,qos,grpcpus,grpmem,grpnodes

sinfo -s

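Once you know your account (group) name from the output above, put it in place of Your_Account to see that group's fairshare and resource limits: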

sacctmgr show account Your_Account withassoc format=account,user,fairshare,grpcpurunmins,grpcpus,grpmem
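The two lookups above can be combined so you don't have to copy the account name by hand. A sketch, assuming your associations are visible to sacctmgr and that the site's Slurm version accepts the same format fields used above; my_accounts and show_group_limits are hypothetical helper names.

```shell
#!/bin/bash
# List the Slurm accounts (groups) you belong to.
# -n drops the header, -P gives pipe-delimited output; sort -u removes
# duplicates, since one account can appear in several associations.
my_accounts() {
    sacctmgr -n -P show associations where user="$USER" format=account | sort -u
}

# For each of your accounts, print the group-level limits and fairshare.
show_group_limits() {
    my_accounts | while read -r acct; do
        echo "== $acct =="
        sacctmgr show account "$acct" withassoc \
            format=account,user,fairshare,grpcpurunmins,grpcpus,grpmem
    done
}

# Only query Slurm where sacctmgr exists (e.g., a HiPerGator login node).
if command -v sacctmgr >/dev/null 2>&1; then
    show_group_limits
fi
```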

Remote Access from VS Code (Remote Tunnels)

You can use VS Code Remote Tunnels to connect without manually forwarding ports:

👉 https://code.visualstudio.com/docs/remote/tunnels

On the HiPerGator login node:

code tunnel

Then connect from your local VS Code.

Warning: If you SSH from the login node directly into a compute node, commands such as nvidia-smi will show all GPUs on the node. This happens because GPU isolation is enforced by Slurm, not by SSH. Avoid SSH-ing into compute nodes manually; always use srun or salloc so that Slurm enforces proper GPU isolation.
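If you do need a shell on a compute node where one of your jobs is already running, one alternative to ssh is to start an extra step inside that job with srun, so it stays within the job's allocation. A minimal sketch, assuming Slurm 20.11 or newer (where --overlap exists); attach_to_job is a hypothetical helper name, and the job ID comes from squeue.

```shell
#!/bin/bash
# Open a shell inside an already-running job's allocation (instead of ssh).
# Usage: attach_to_job <jobid>
attach_to_job() {
    local jobid="$1"
    if [ -z "$jobid" ]; then
        echo "usage: attach_to_job <jobid>" >&2
        return 1
    fi
    # --overlap lets this step share resources with steps already running in the job
    srun --jobid="$jobid" --overlap --pty /bin/bash
}
```

For example, attach_to_job 12345 (a placeholder ID) opens a shell in that job; nvidia-smi run there should list only the job's GPUs.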

Tip: keep the tunnel session open while you work.